Shades of 3D Vision

How does the brain recreate a 3D world from the 2D images the eyes capture? Recent evidence shows that shadows and light may be even more important than was originally thought when it comes to the recreation of a 3D world in the brain.

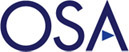

Illusion of depth in a flat image. Is the cube engraved or embossed on the screen? The brain uses the shading patterns to recreate the third dimension and therefore the illusion of depth. Whether you perceive the cube as being engraved or embossed depends on where your brain is assuming the light is coming from. In fact, with a little effort you can even train your brain to “see” either image on command.

The retina of each eye captures only 2D images of the world, yet we clearly perceive a 3D world. Where does this extra dimension come from? The answer lies in the way the brain’s visual system combines the slightly different images from the right and left eyes: the larger the apparent displacement of an object between the two images, the nearer it is assumed to be. You can easily experience this effect by placing your thumb a few inches in front of your nose. If you look at your thumb first with your left eye and then with the right one, you will see that it appears to move; as you increase the distance between your thumb and your nose, your thumb will appear to move less.
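The thumb experiment can be put into rough numbers with a little trigonometry. The sketch below is only an illustration: it assumes a distance between the eyes of about 6.3 cm (a typical value chosen for the example, not taken from the study) and an object placed straight ahead, and the function name `apparent_shift_deg` is hypothetical. It computes the angle between the two eyes' lines of sight to the object, which is the amount by which the object appears to jump when you switch eyes.

```python
import math

# Assumed distance between the two eyes, in metres (illustrative value only).
EYE_SEPARATION_M = 0.063

def apparent_shift_deg(distance_m, eye_separation_m=EYE_SEPARATION_M):
    """Angle (in degrees) between the two eyes' lines of sight to an object
    located straight ahead at the given distance."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2 * math.atan(eye_separation_m / (2 * distance_m)))

# The farther the thumb, the smaller the apparent jump between the two views.
for d in (0.1, 0.3, 0.6):
    print(f"object at {d:.1f} m: shift of about {apparent_shift_deg(d):.1f} degrees")
```

The angle shrinks steadily as the distance grows, which is why the brain can read a large shift as "near" and a small one as "far".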

Let us now consider a slightly more challenging situation: imagine you are looking at a photograph of a little house on a prairie. To add to the challenge, go ahead and shut one eye. You will notice that, even with one eye closed, you can still obtain vivid impressions of depth. This kind of 3D perception cannot rely on the stereoscopic comparison of the images captured by both eyes, but must make use of other cues such as the shading patterns in the picture. Shading can make an object appear concave or convex; its interpretation, however, depends strongly on knowing where the light source is.
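Why shading alone cannot settle the question of concave versus convex can be seen in a toy calculation. The sketch below is not the stimulus or the model used in the study: it assumes a simple matte (Lambertian) surface, where brightness is the cosine of the angle between the surface normal and the light direction, and it works on a 1D height profile for brevity. The names `shade_profile`, `bump` and `dent` are hypothetical, and "left" simply means a light direction with a negative horizontal component.

```python
import math

def shade_profile(heights, light_x, light_z=1.0):
    """Lambertian brightness along a 1D height profile.

    The light comes from direction (light_x, light_z); brightness is the
    cosine of the angle between the surface normal and that direction,
    clipped at zero for parts of the surface facing away from the light.
    """
    norm_l = math.hypot(light_x, light_z)
    lx, lz = light_x / norm_l, light_z / norm_l
    brightness = []
    for i in range(1, len(heights) - 1):
        slope = (heights[i + 1] - heights[i - 1]) / 2.0  # central difference
        nx, nz = -slope, 1.0                              # unnormalised normal
        cos_angle = (nx * lx + nz * lz) / math.hypot(nx, nz)
        brightness.append(max(0.0, cos_angle))
    return brightness

# A smooth bump and the matching dent (heights in arbitrary pixel units).
bump = [4.0 * math.exp(-((i - 20) / 6.0) ** 2) for i in range(41)]
dent = [-h for h in bump]

bump_lit_from_left = shade_profile(bump, light_x=-1.0)
dent_lit_from_right = shade_profile(dent, light_x=+1.0)
dent_lit_from_left = shade_profile(dent, light_x=-1.0)

same = all(
    math.isclose(a, b, abs_tol=1e-12)
    for a, b in zip(bump_lit_from_left, dent_lit_from_right)
)
print("bump lit from left looks like dent lit from right:", same)
print("bump and dent differ under the same light:",
      bump_lit_from_left != dent_lit_from_left)
```

Under these assumptions a bump lit from one side produces exactly the same brightness pattern as a dent lit from the other side, so the image by itself is ambiguous. Only an assumption about where the light is lets the viewer pick one shape.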

![Are these images convex or concave?](./content/v11/s7/opfocus_v11_s7_p2_250.jpg)

Are these images convex or concave? Examples of the pictures used by Mamassian and coworkers in the experiment where subjects were asked to interpret a 2D image as a 3D object.

Surprisingly, our preferred lighting direction is not directly above our heads, but rather slightly shifted to the left [1, 2]. A first hint of this left preference came from art: for over two millennia painters have tended to light their scenes from the left, with examples ranging from Roman mosaics to Renaissance, Baroque and Impressionist works [1]. Experiments by Mamassian and Goutcher have reinforced this idea [2]. According to Mamassian, “although surprisingly robust across observers, the origin of this left bias is still largely a mystery. It is difficult to explain it from an ecological point of view,” unlike the assumption that light comes from above, which can be explained by the sun being above our heads, “and as such it challenges the view that we have evolved optimally for our environment.”

Mamassian and his coworkers Gerardin and Kourtzi performed a study to understand the interplay between illumination and 3D shape perception. The question concerned the exact process the brain follows in reconstructing the vivid impression of a 3D reality in our minds. Is the overall meaning interpreted first, with details such as the illumination taken into account only subsequently (top-down processing)? Or is it the other way around, with the illumination accounted for before the overall meaning of the scene is interpreted (bottom-up processing)?

Until now, the processing of 3D shapes was thought to occur in the brain’s visual system in a top-down manner. However, the evidence produced by Pascal Mamassian and coworkers shows that it may well be the other way around. It seems that the areas of the brain that process simple features of an image are activated when subjects process information about light, and that this level of processing then influences the 3D shape building in those brain areas that process more complex features of the images, such as whether the image is convex or concave. According to Mamassian, "what is rather surprising is the finding that illumination is taken into account so early in visual processing. Up to now, the common assumption was that prior knowledge had to be used in a top-down fashion as if I were reasoning that light must be on the left of the image because I can see shadows on the right of the corresponding objects." Wilson Geisler from the University of Texas agrees that "the study by Mamassian and coworkers provides solid evidence that [the standard] view is too simple."

The surprising finding that 3D images are processed with a bottom-up approach came from looking at the subjects’ brain activity. Mamassian and coworkers designed a simple experiment in which subjects were presented with images lit from one of four possible light directions. These images were ambiguous: the same image could be interpreted as a convex ring or a concave one depending on where the subjects assumed the light was coming from. When looking at these images, the subjects had to decide whether they had a concave or convex shape. During the experiment, the subjects’ brain activity was recorded with a neuroimaging technique called functional magnetic resonance imaging (fMRI). When a brain area is more active it consumes more oxygen, and to meet this increased demand the blood flow to the active area increases. fMRI detects which parts of the brain show these signs of neural activity.

The study by Mamassian and coworkers offers a possible new insight into the way the brain constructs 3D reality. In perceiving 3D shapes from shading patterns, the brain goes from a simple processing level, at which it works out where the light is coming from, to a more complex level at which this information about the light is used to decide the 3D shape of an image. This finding contradicts current theories of vision and suggests that the brain's visual system may work in a bottom-up fashion. "In retrospect," Geisler concludes, Mamassian’s finding that lighting is taken into account "in early visual areas is plausible. I think that the result obtained by Mamassian will motivate new models of shape from shading and perhaps new studies of neural activity in the primary visual cortex of primates."

[1] J. Sun & P. Perona, Where is the sun? Nat. Neurosci. 1, 183-184 (1998).

[2] P. Mamassian & R. Goutcher, Prior knowledge on the illumination position. Cognition 81, 81-89 (2001).

Joana Braga Pereira

2010 © Optics & Photonics Focus

JBP is currently working on her Doctoral Thesis in Neuroimaging at the University of Barcelona, Spain.

Peggy Gerardin, Zoe Kourtzi & Pascal Mamassian, Prior knowledge of illumination for 3D perception in the human brain, PNAS 107, 16309–16314 (2010).